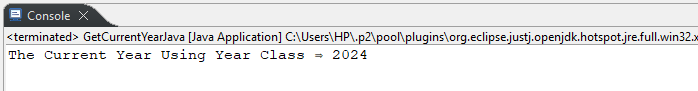

To get the current year in Java, you can use built-in classes as well as third-party libraries, such as Calendar, Year, CalendarUtils, and Joda-Time.
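A minimal sketch of the built-in routes, assuming Java 8 or later. It covers `Year`, `LocalDate`, and the legacy `Calendar` class. The third-party options (CalendarUtils, Joda-Time) are left out so the example needs no extra dependencies. The class and method names are illustrative only.

```java
import java.time.LocalDate;
import java.time.Year;
import java.util.Calendar;

class CurrentYear {
    // java.time (Java 8+): Year.now() reads the system clock in the default time zone
    static int withYear() {
        return Year.now().getValue();
    }

    // Equivalent java.time route via today's date
    static int withLocalDate() {
        return LocalDate.now().getYear();
    }

    // Legacy API: the calendar type depends on the default locale,
    // so non-Gregorian locales (e.g., th_TH) can report a different year number
    static int withCalendar() {
        return Calendar.getInstance().get(Calendar.YEAR);
    }

    public static void main(String[] args) {
        System.out.println("Year.now():      " + withYear());
        System.out.println("LocalDate.now(): " + withLocalDate());
        System.out.println("Calendar:        " + withCalendar());
    }
}
```

For new code, `Year.now()` is usually the most direct choice. `Calendar` mainly matters when you work with older APIs.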

Java

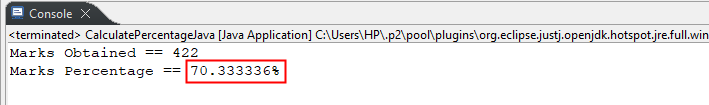

How to Calculate Percentage in Java

To calculate a percentage in Java, you can use the standard percentage formula (i.e., percentage = (part / whole) * 100) or the BigDecimal class.
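Here is a short sketch of both approaches. The `double` version applies the formula directly. If you use `int` operands instead, `part / whole` is integer division and truncates to 0 whenever part < whole. The `BigDecimal` version rounds to a chosen number of decimal places using `RoundingMode.HALF_UP`, which is an assumed rounding policy. The class and method names are hypothetical.

```java
import java.math.BigDecimal;
import java.math.RoundingMode;

class Percentage {
    // Standard formula (part / whole) * 100 using double arithmetic
    static double percent(double part, double whole) {
        if (whole == 0) {
            throw new IllegalArgumentException("whole must not be zero");
        }
        return part / whole * 100;
    }

    // BigDecimal: multiply first, then divide with an explicit scale and rounding mode
    static BigDecimal percent(BigDecimal part, BigDecimal whole, int scale) {
        return part.multiply(BigDecimal.valueOf(100))
                   .divide(whole, scale, RoundingMode.HALF_UP);
    }

    public static void main(String[] args) {
        System.out.println(percent(45, 60)); // 75.0
        System.out.println(percent(new BigDecimal("1"), new BigDecimal("3"), 2)); // 33.33
    }
}
```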

How to Find the Square Root of a Number in Java

To find the square root of a number in Java, use the Math.sqrt() or Math.pow() method, or implement an algorithm such as binary search or brute force.
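The sketch below shows all four approaches side by side. One assumption to note: the binary-search and brute-force versions compute the *integer* square root, floor(sqrt(n)), for non-negative `int` inputs. This is how those techniques are commonly presented. The method names are illustrative.

```java
class SquareRoot {
    // Math.sqrt returns the correctly rounded square root (NaN for negative input)
    static double viaSqrt(double x) {
        return Math.sqrt(x);
    }

    // Raising to the power 0.5 is mathematically equivalent, but not guaranteed bit-identical
    static double viaPow(double x) {
        return Math.pow(x, 0.5);
    }

    // Binary search for floor(sqrt(n)); long products avoid int overflow
    static int floorSqrtBinarySearch(int n) {
        if (n < 0) {
            throw new IllegalArgumentException("n must be non-negative");
        }
        int lo = 0, hi = n, ans = 0;
        while (lo <= hi) {
            int mid = lo + (hi - lo) / 2;
            long square = (long) mid * mid;
            if (square == n) {
                return mid;
            } else if (square < n) {
                ans = mid;      // mid is a candidate; look for a larger one
                lo = mid + 1;
            } else {
                hi = mid - 1;
            }
        }
        return ans;
    }

    // Brute force: step up until the next square would exceed n
    static int floorSqrtBruteForce(int n) {
        if (n < 0) {
            throw new IllegalArgumentException("n must be non-negative");
        }
        int i = 0;
        while ((long) (i + 1) * (i + 1) <= n) {
            i++;
        }
        return i;
    }

    public static void main(String[] args) {
        System.out.println(viaSqrt(25));               // 5.0
        System.out.println(floorSqrtBinarySearch(15)); // 3
        System.out.println(floorSqrtBruteForce(15));   // 3
    }
}
```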

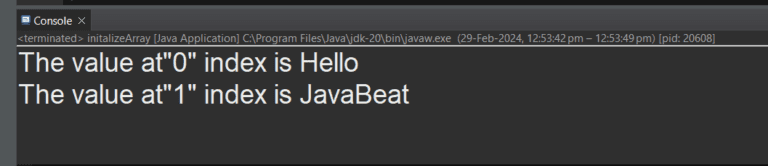

Initialize an Array in Java | Simple to Advanced

To initialize an array in Java, rely on default values, use the “new” keyword, or call built-in methods such as IntStream.range(), clone(), and Arrays.setAll().
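A compact sketch of these initialization styles for an `int[]` follows. Each method returns a fresh array so the results are easy to compare. The method names are hypothetical.

```java
import java.util.Arrays;
import java.util.stream.IntStream;

class ArrayInit {
    // "new" with a size: every element gets the default value (0 for int)
    static int[] withDefaults(int size) {
        return new int[size];
    }

    // Array initializer: values listed explicitly
    static int[] withInitializer() {
        return new int[] {2, 3, 5, 7, 11};
    }

    // IntStream.range(start, end) with an exclusive end: 0, 1, ..., n - 1
    static int[] withRange(int n) {
        return IntStream.range(0, n).toArray();
    }

    // Arrays.setAll computes each element from its index (here, i * i)
    static int[] withSetAll(int n) {
        int[] squares = new int[n];
        Arrays.setAll(squares, i -> i * i);
        return squares;
    }

    // clone() makes a shallow copy of an existing array
    static int[] withClone(int[] source) {
        return source.clone();
    }

    public static void main(String[] args) {
        System.out.println(Arrays.toString(withDefaults(5))); // [0, 0, 0, 0, 0]
        System.out.println(Arrays.toString(withRange(5)));    // [0, 1, 2, 3, 4]
        System.out.println(Arrays.toString(withSetAll(5)));   // [0, 1, 4, 9, 16]
    }
}
```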

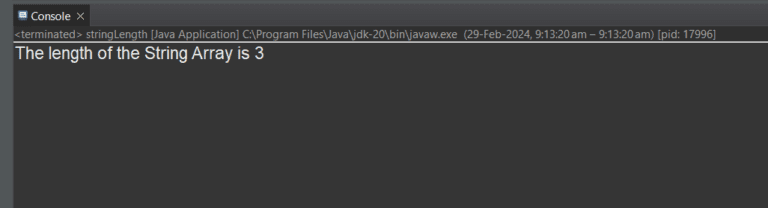

How to Get String Length in Java?

To get the length of a string in Java, use the length() method of the String class or the StringUtils class, or the length field of an array (for example, a char array created from the string), as shown in this article.
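A minimal sketch covering `String.length()` and the array `length` field (a field, so no parentheses). StringUtils comes from Apache Commons Lang, a third-party dependency, so it is not used here. Instead, a hypothetical `nullSafeLength` helper mimics its behavior of returning 0 for `null`.

```java
class StringLength {
    // String.length() returns the number of UTF-16 code units (chars)
    static int viaLength(String s) {
        return s.length();
    }

    // Arrays expose a length field, e.g. the char[] returned by toCharArray()
    static int viaCharArray(String s) {
        return s.toCharArray().length;
    }

    // Hypothetical null-safe helper, similar in spirit to StringUtils.length()
    static int nullSafeLength(String s) {
        return s == null ? 0 : s.length();
    }

    public static void main(String[] args) {
        System.out.println(viaLength("Hello"));    // 5
        System.out.println(viaCharArray("Hello")); // 5
        System.out.println(nullSafeLength(null));  // 0
    }
}
```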

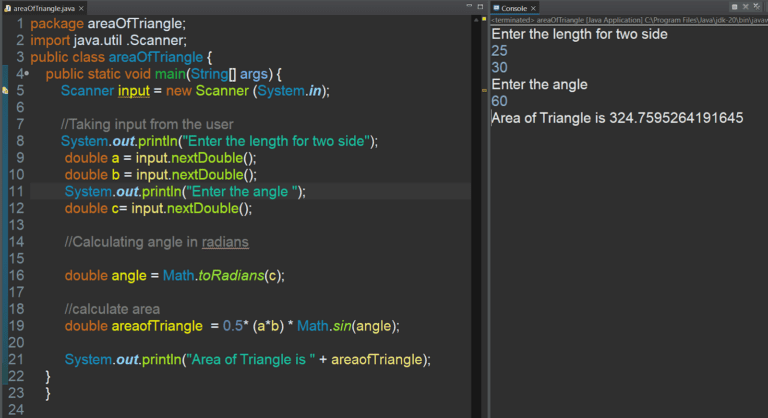

How to Find the Area of Triangle in Java?

To calculate the area of a triangle in Java, use the formula (base * height) / 2, implement Heron’s formula, or use Math class methods, as shown in this article.
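The following sketch shows both formulas. Heron's formula uses the semi-perimeter s = (a + b + c) / 2 and area = sqrt(s(s - a)(s - b)(s - c)), with `Math.sqrt()` supplying the square root. `double` parameters are used so that `/ 2` is not integer division. The triangle-inequality check is an added safeguard, not necessarily part of the original article.

```java
class TriangleArea {
    // Base-height formula: area = (base * height) / 2
    static double fromBaseHeight(double base, double height) {
        return base * height / 2;
    }

    // Heron's formula from the three side lengths
    static double heron(double a, double b, double c) {
        // Reject side lengths that cannot form a triangle
        if (a + b <= c || a + c <= b || b + c <= a) {
            throw new IllegalArgumentException("sides do not form a triangle");
        }
        double s = (a + b + c) / 2; // semi-perimeter
        return Math.sqrt(s * (s - a) * (s - b) * (s - c));
    }

    public static void main(String[] args) {
        System.out.println(fromBaseHeight(10, 5)); // 25.0
        System.out.println(heron(3, 4, 5));        // 6.0
    }
}
```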

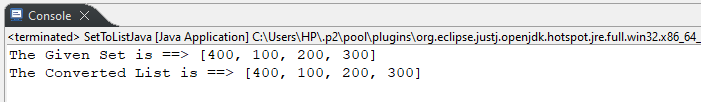

How to Convert Set to a List in Java?

To convert a Set to a List in Java, you can use different methods, such as “List.addAll()”, the “ArrayList” constructor, the Java 8 Stream API, or “List.copyOf()”.
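A sketch of the four conversions, assuming Java 10 or later (`List.copyOf` was added in Java 10, `List.of` in Java 9). A `LinkedHashSet` is used in `main` so the iteration order, and therefore each list's order, is predictable. A plain `HashSet` makes no ordering guarantee. The method names are illustrative.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;
import java.util.stream.Collectors;

class SetToList {
    // ArrayList copy constructor
    static List<String> viaConstructor(Set<String> set) {
        return new ArrayList<>(set);
    }

    // addAll into an existing (here empty) list
    static List<String> viaAddAll(Set<String> set) {
        List<String> list = new ArrayList<>();
        list.addAll(set);
        return list;
    }

    // Java 8 Stream API
    static List<String> viaStream(Set<String> set) {
        return set.stream().collect(Collectors.toList());
    }

    // List.copyOf (Java 10+): unmodifiable result; throws NullPointerException on null elements
    static List<String> viaCopyOf(Set<String> set) {
        return List.copyOf(set);
    }

    public static void main(String[] args) {
        // LinkedHashSet keeps insertion order, so every result prints [a, b, c]
        Set<String> set = new LinkedHashSet<>(List.of("a", "b", "c"));
        System.out.println(viaConstructor(set));
        System.out.println(viaAddAll(set));
        System.out.println(viaStream(set));
        System.out.println(viaCopyOf(set));
    }
}
```

Choose `List.copyOf()` when you want a read-only snapshot. The other three return a mutable `ArrayList` in current JDKs.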

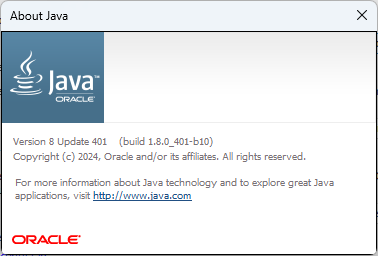

How to Check Java Version on Windows | Mac | Linux

To check the Java version on Windows, Linux, or Mac operating systems, run the “java -version” command from the respective terminal.
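If you need the version from inside a program rather than a terminal, here is a possible sketch. It reads the `java.version` system property and, on Java 10+, `Runtime.version().feature()`. Both report the JVM that is running the program. The class and method names are hypothetical.

```java
class JavaVersion {
    // "java.version" system property, e.g. "17.0.9", or "1.8.0_392" on Java 8
    static String versionString() {
        return System.getProperty("java.version");
    }

    // Runtime.version() exists since Java 9; feature() (Java 10+) is the major release number
    static int featureVersion() {
        return Runtime.version().feature();
    }

    public static void main(String[] args) {
        System.out.println("java.version:    " + versionString());
        System.out.println("feature release: " + featureVersion());
    }
}
```

On the command line, note that `java -version` prints to standard error. On JDK 9 and later, `java --version` prints similar information to standard output, which is easier to capture in scripts.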